Case Study: Improving Verity Nature’s field apps using apptest.ai

In this case study, we interview Justin Baird, CTO of Verity Nature (https://www.veritynature.com), about how he and his team have been using apptest.ai to improve test coverage and quality assurance.

Thanks for joining us, Justin. First of all, can you tell us about Verity Nature?

Thanks for having me! At Verity Nature, we are building applications that empower technicians and project developers to digitize every step of the measurement, reporting and verification process required for nature-based carbon sequestration projects. By doing so, we are helping to deliver transparency and integrity to the voluntary carbon market.

Many of our projects operate in remote wilderness areas where there is no Internet connection. With users out in the field and offline, we demand flawless functionality across devices to ensure the quality and reliability of the measurements taken. With these requirements, our software quality assurance program operates with uncompromising specifications, and we are always on the lookout for innovative tools that can help make our software even more robust.

Cool, so how did you hear about Apptest and what do you like about it?

As our product development has progressed and we have brought in more features across a number of different user types, we have needed to move beyond our current testing regime. I was looking for automated solutions, but I also wanted to see if I could find any AI solutions that would make it faster to deploy and would test more scenarios than just the ones we come up with ourselves.

A friend sent me a link to Apptest.ai, and I’ve been impressed with the solution. It pretty much does what I had in mind about using AI to test apps; it’s all already in there. What I like about it is that it has enabled our test team to do more and achieve higher quality results faster, with the same number of testers.

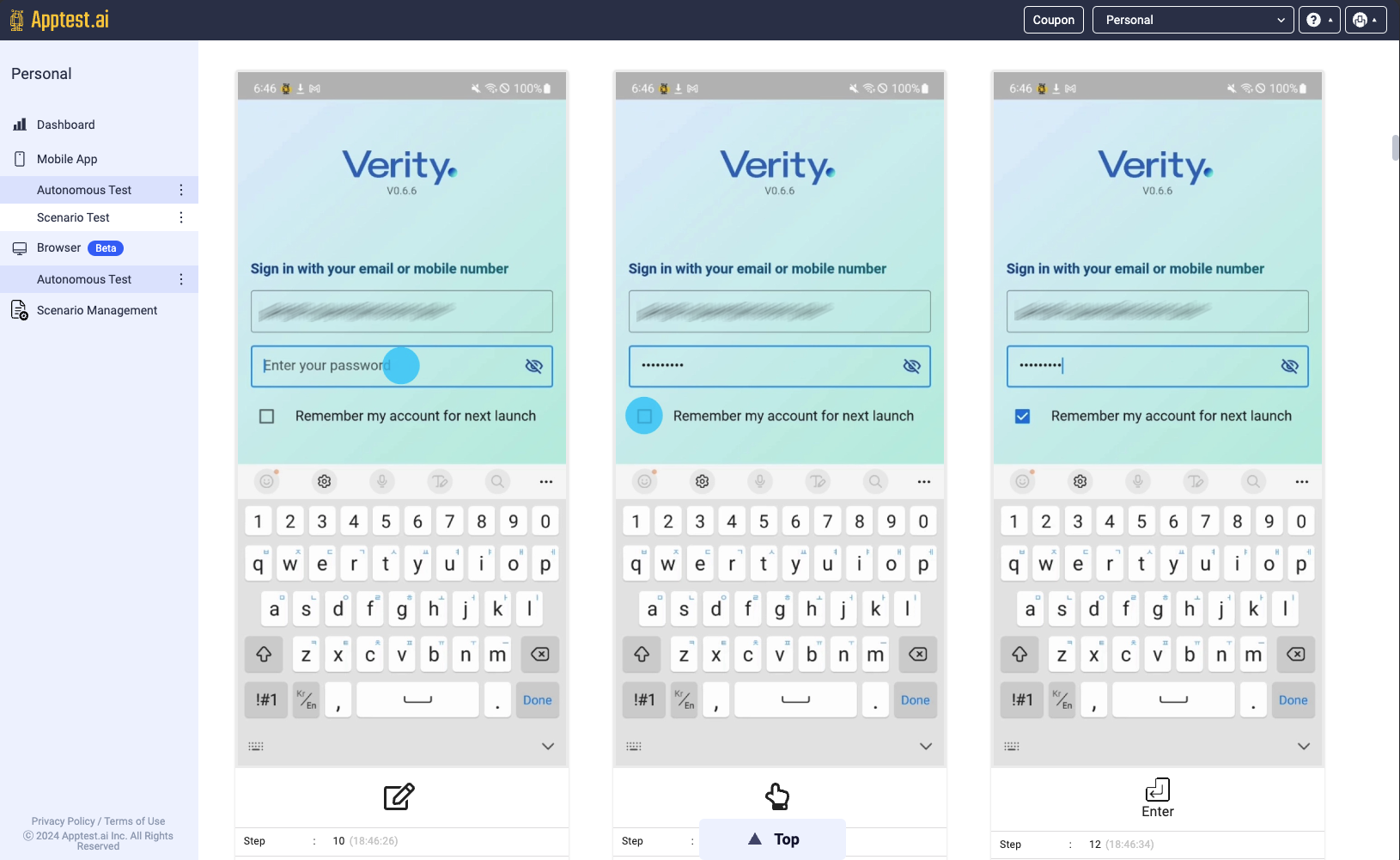

When you’re testing new functionality, you can’t just go and immediately automate the whole process. A tester has to play around, see how things feel, and see what happens with the new functionality on one device compared to another. This kind of experimentation is necessary, and also time-consuming. What I really like about the Apptest AI is that it does this kind of stuff for you.

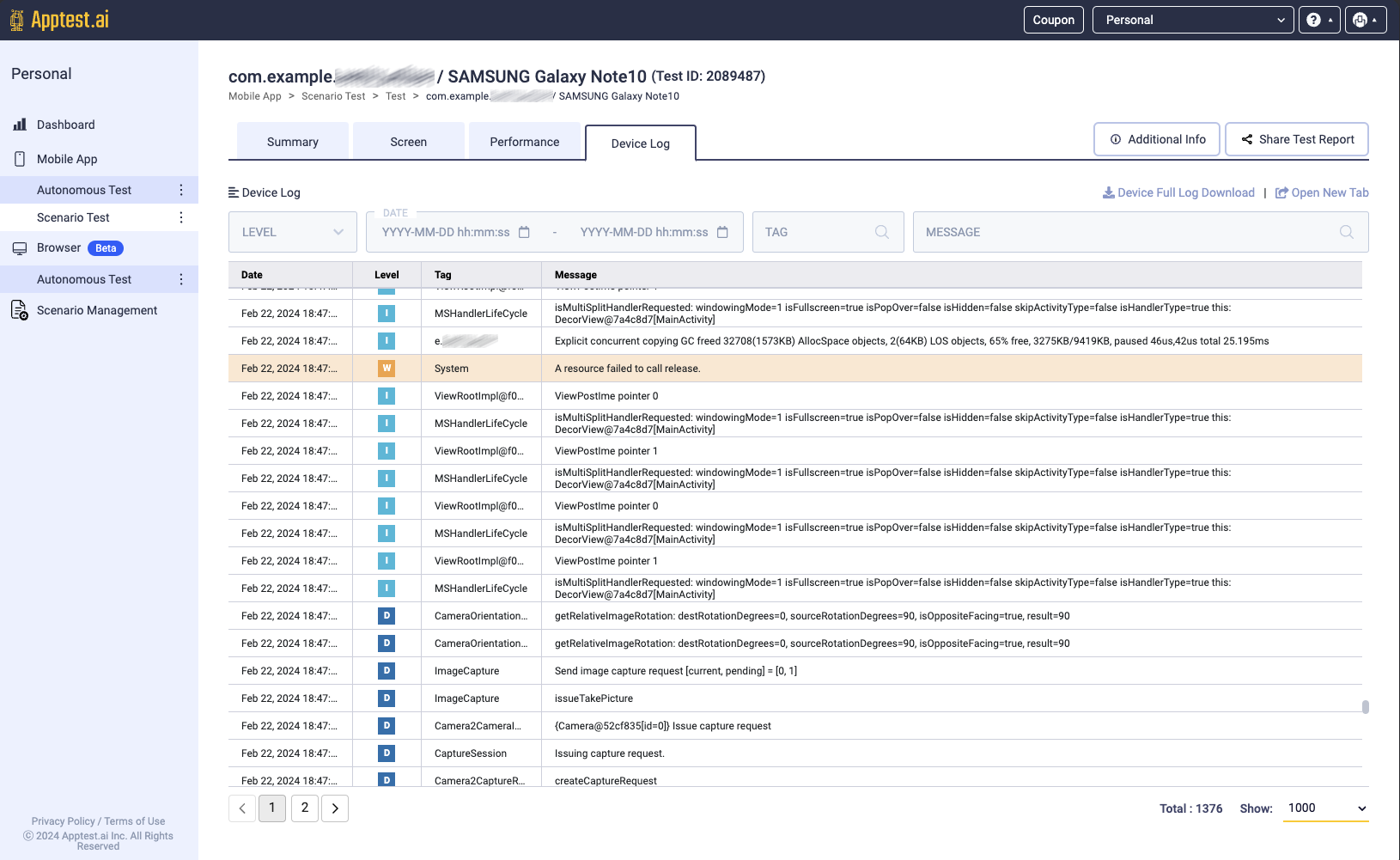

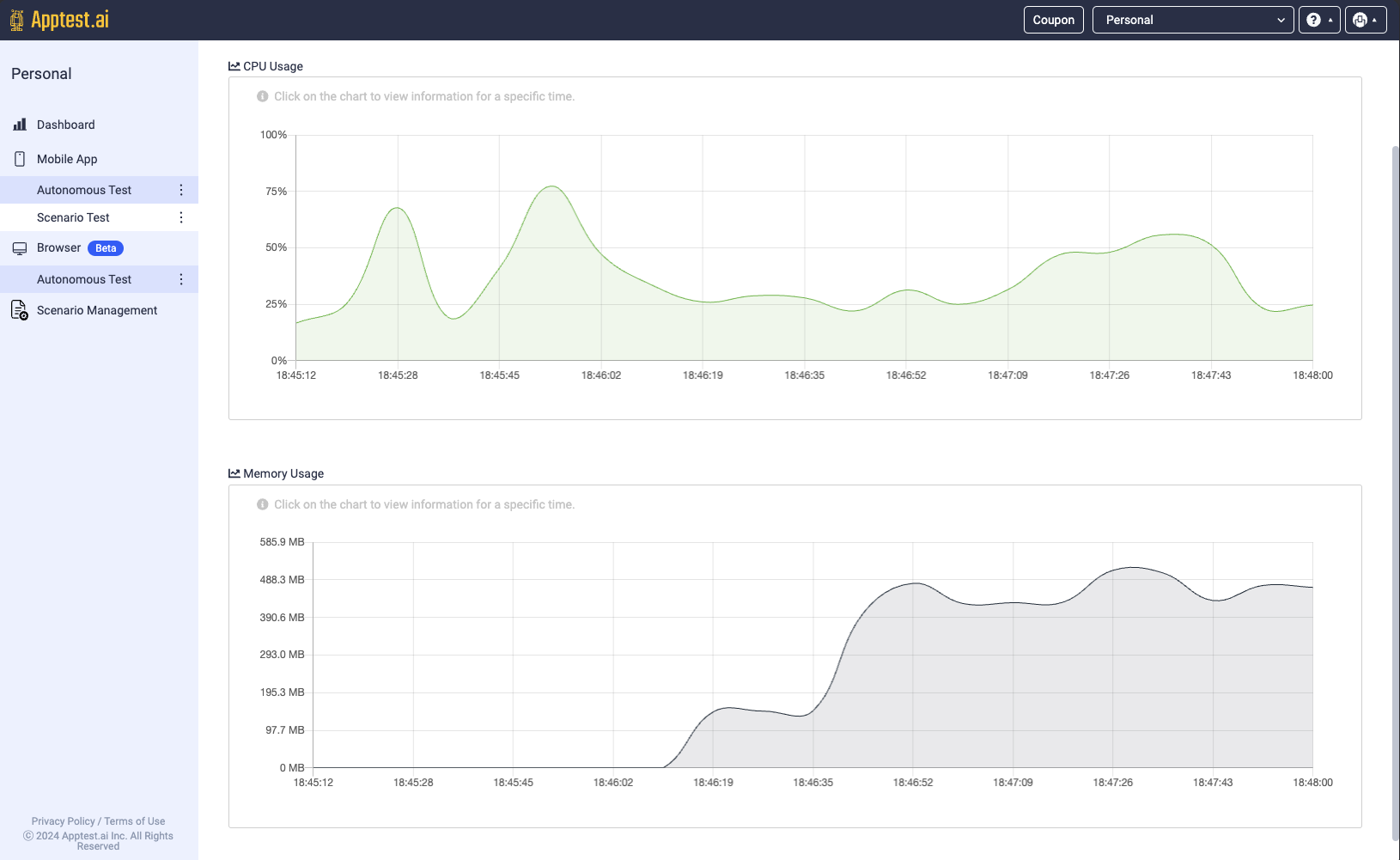

It does a bunch of iterations, figuring out where the buttons are, where to log in, what to press to go to the next page, and so on. The dashboard shows you all of these iterations, with screen grabs and logs of every step of the testing process, and dashboard views of app performance on every device you test on.

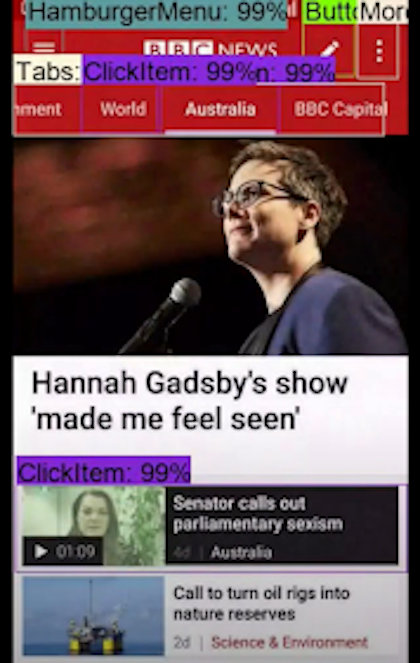

Apptest AI is able to automatically identify and recognise all the user interface elements on a page. In this figure, it detects the items that can be clicked, like menus, buttons and search boxes.

This is where having an AI do all of this experimental and iterative testing lets our testers focus their time on more meaningful work. Also, the mobile test farm is really cool.

Mobile test farm, what’s that?

Oh yeah, so that’s my own name for the Apptest testing platform. At the Apptest data center, they have a big rack holding a huge number of devices. That’s how you can take your app, upload it to the service, and then run the app on dozens of devices at the same time. This in itself saves a huge amount of time and a lot of investment. We didn’t have to buy every flavor of Android phone out there to test our app ourselves; we can use Apptest’s devices instead.

Also, we have some apps that need to be tested on low-end devices for some projects. Working with the Apptest team, I sent them three of our low-end target devices, and they put them up in their mobile test farm for us. Now we can test with those devices along with all the others, and let the AI figure out the issues for us 🙂

Anything you think we can improve to make testing even easier for you?

Yes, while we have definitely saved a lot of time, getting things up and running at the very beginning is a bit challenging! To get this all working well, it does require a lot of things to be configured and set up correctly. It’s a bit of a new paradigm to do testing like this, so it took a little while for our testers to get used to having an AI assistant, and how to best leverage this new resource.

Also, we build tablet apps, and these were more of a struggle to get working with the AI. The mobile device rack doesn’t have tablets available, so we ran those tests locally with Apptest’s Stego application. I think tablets would be a great addition to the mobile test farm.

Thanks Justin, any final thoughts?

Sure, so Apptest has helped us save a lot of time and headaches, and made our test team more efficient, but the things I’ve found most valuable are the automated test scenarios and comprehensive coverage across almost any device you’d ever need to test on. I think that’s the super cool part: it saved us a lot of expense in having to otherwise buy all those phones ourselves. I also think it’s a great tool for almost any startup to try, and for mobile app development agencies too.