Enhancing Mobile Testing Workflow with Stego

Verity Nature is using our Stego solution to streamline its mobile application testing process across a wide range of devices, including older and low-cost phones commonly found in regions like East Africa.

By integrating Stego into their workflow, Verity Nature has been able to automate tests, reduce manual effort, and improve overall app quality. Below is their story, detailing how Stego has helped them enhance their development process.

At Verity Nature, we are developing an innovative mobile application that allows landowners to capture their efforts to plant trees and manage green carbon projects on their land. We are now in the process of beta testing the application with a number of landowners across Kenya and other geographies around the world.

Our application will be used by people around the world on a huge assortment of Android and iPhone devices, with a wide range of performance and capabilities. Especially in markets like East Africa, we have encountered many older and low-cost devices that present unique challenges for application development.

We have found Stego to be a very powerful tool for automating tests across a broad range of devices. Additionally, the team at Apptest.ai has been super helpful and supportive of our efforts. We’ve sent them a number of devices that are common in Africa but uncommon elsewhere so they could add them to their mobile device testing farm. Now our developers can run automated tests from anywhere in the world at any time.

While Stego’s AI capabilities allow it to easily generate test scenarios, we have found the most value in defining custom test cases that target specific areas of our application. In the example below, I’ve shared a scenario where a test fails so you can see how it works.

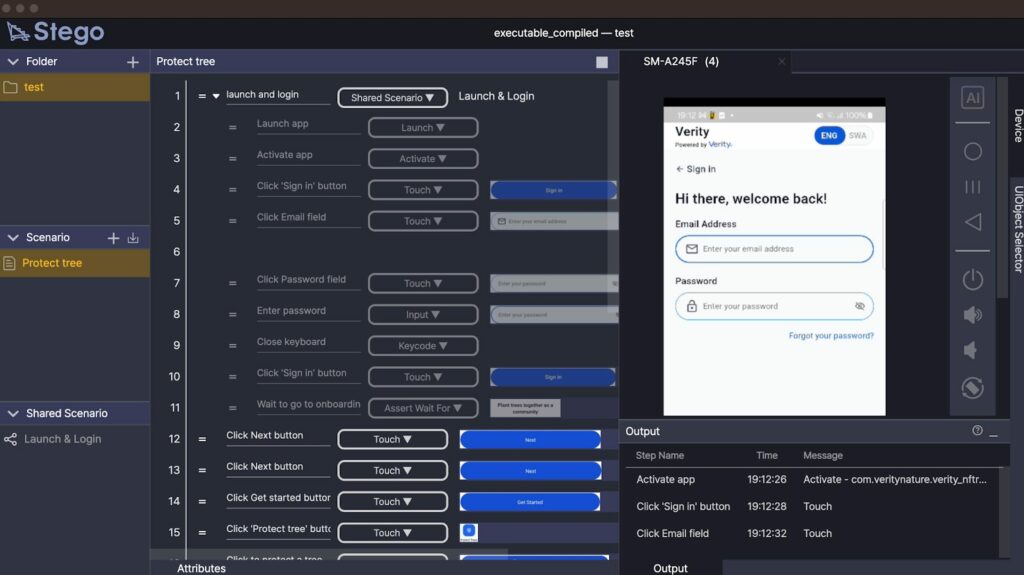

In Stego, you start by setting up testing scenarios. In the screenshots below, you can see each step of the scenario. I’m testing on an A24, one of the top-selling low-cost Samsung devices in Kenya. This device is connected to my laptop, and the live view of the screen is shown on the right side of the interface.

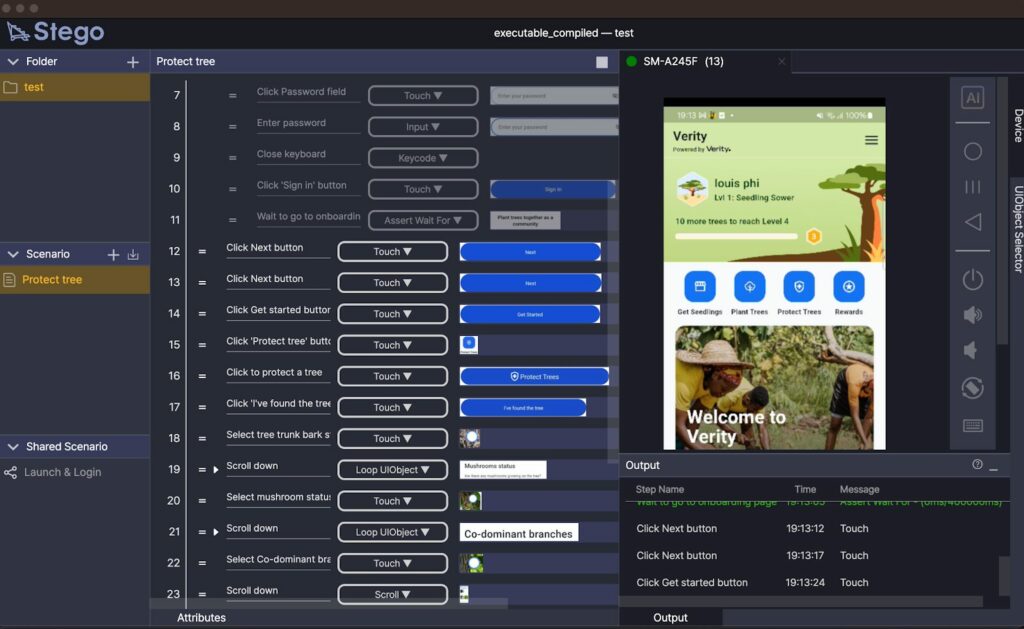

Stego automatically moves through each step of the testing scenario. Below, you can see that Stego has logged in with a username and password and is moving through the onboarding screens of the application.

Stego is device-independent, and it identifies the steps in the process by locating all of the active buttons and functions of the app, which is great since every device is a little different due to display height and width. Below, you can see that it’s looking for specific buttons to move through the test.

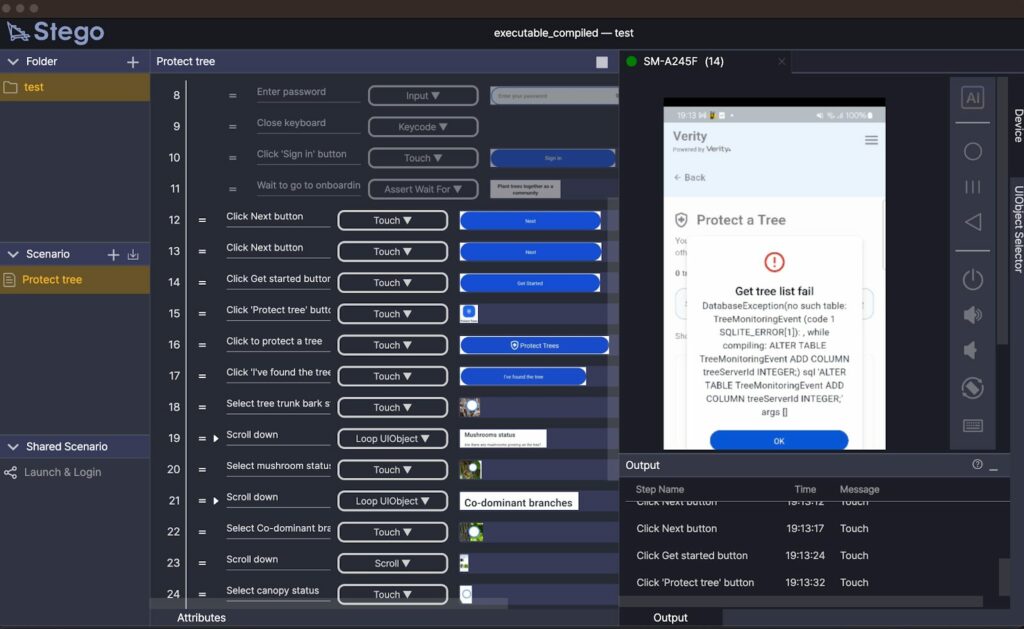

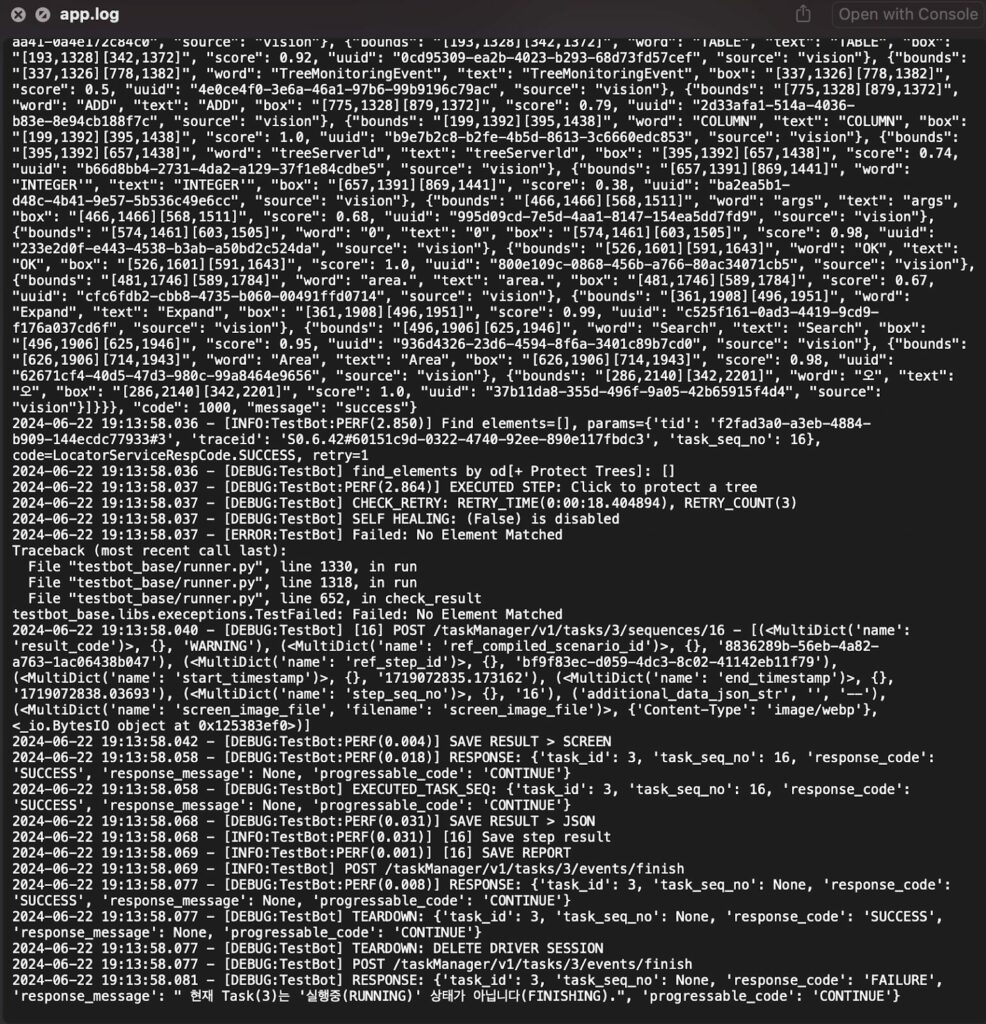

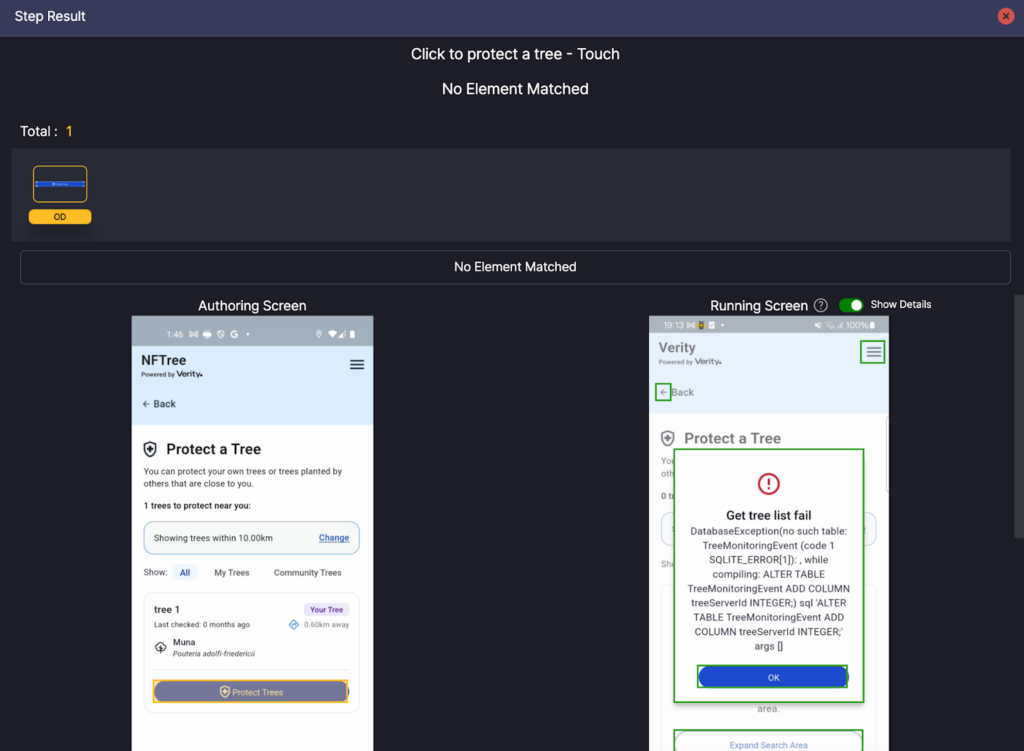

When Stego hits a step it cannot complete, it retries a few times and then reports an error. In the run below, the app fails to find a component in the database because of a revision mismatch between the application version and the backend API.

Detailed error logs are provided as part of the output, which can then be inspected and exported for further analysis.

Additionally, the Step Result view shows more information about the error.

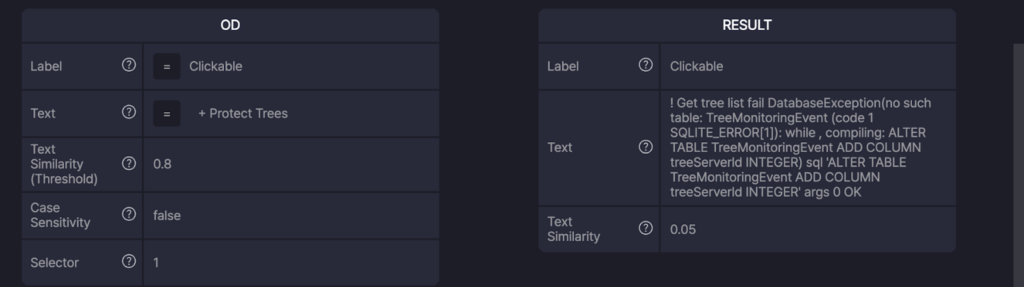

The results show further information about the error. You can also see that Stego examined the current view without finding a text match: the text similarity score was 0.05, far below the similarity threshold of 0.8.
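To make the retry and threshold behaviour more concrete, here is a minimal Python sketch of the general idea. It is not Stego's implementation: the similarity metric (`difflib.SequenceMatcher`), the retry count of 3, and the names `run_step`, `read_screen`, and `StepFailed` are all assumptions made for illustration. Only the 0.8 threshold comes from the Step Result view described above.

```python
from difflib import SequenceMatcher

SIMILARITY_THRESHOLD = 0.8  # the threshold reported in the Step Result view
MAX_ATTEMPTS = 3            # assumption: stands in for "a few" retries


class StepFailed(Exception):
    """Hypothetical error raised when no on-screen text matches well enough."""

    def __init__(self, expected, attempts, best_score):
        super().__init__(
            f"no match for {expected!r} after {attempts} attempts "
            f"(best similarity {best_score:.2f})"
        )
        self.attempts = attempts
        self.best_score = best_score


def text_similarity(expected, observed):
    """Return a 0.0-1.0 score. A stand-in metric, not necessarily Stego's."""
    return SequenceMatcher(None, expected.lower(), observed.lower()).ratio()


def best_match(expected, screen_texts):
    """Return (score, text) for the on-screen text closest to the expected label."""
    scored = [(text_similarity(expected, text), text) for text in screen_texts]
    return max(scored, default=(0.0, ""))


def run_step(expected, read_screen,
             max_attempts=MAX_ATTEMPTS, threshold=SIMILARITY_THRESHOLD):
    """Look for the expected text, re-reading the screen on each attempt.

    read_screen is a hypothetical callable returning the texts currently visible.
    Returns (attempt_number, matched_text) or raises StepFailed.
    """
    best_score = 0.0
    for attempt in range(1, max_attempts + 1):
        score, text = best_match(expected, read_screen())
        best_score = max(best_score, score)
        if score >= threshold:
            return attempt, text
    raise StepFailed(expected, max_attempts, best_score)


if __name__ == "__main__":
    # Simulated screen that only ever shows an error message.
    try:
        run_step("Continue", lambda: ["Component not found"])
    except StepFailed as err:
        print(err)
```

Matching on a similarity score rather than an exact string lets a step tolerate small differences in on-screen text, while a strict threshold still catches cases like the one above, where the expected screen never appears.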

Stego has saved us time and effort, scaled across a wide variety of devices, and lowered our costs by reducing manual testing. By integrating Stego into our development process, we have increased our test coverage and the quality of our applications. I’d recommend Stego to any company, big or small, looking to enhance its current testing workflow.
