Mobile App Testing:

Which Automation Method Should You Choose? Apptest.ai Practice Guide

How do you handle that final check before app release?

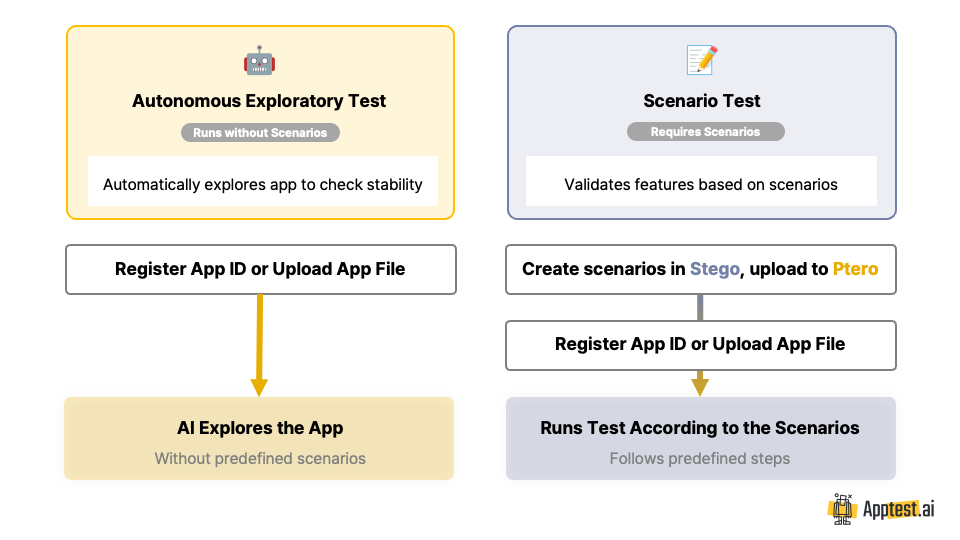

In our previous article, “Autonomous Exploratory Testing vs. Scenario Testing: What’s the Difference?”, we explored the key differences between these two testing approaches. But knowing the concepts and actually implementing them in real work scenarios? That’s a whole different challenge.

This guide breaks down when and how to use each testing method within Apptest.ai, with concrete examples from real teams.

Autonomous Exploratory Testing: “Just Let It Run and See What Happens”

Perfect For These Scenarios

- Quick stability checks before release deadlines

- Time-crunched situations where writing scenarios isn’t feasible

- Bug hunting missions to catch unexpected issues

How It Actually Works

Think of it as having an AI intern who’s really curious about your app. No scripts, no predetermined paths: just exploration across every screen and feature it can reach.

| Upload app → AI explores everything → Catches crashes instantly → Generates detailed report |

Real Team Examples

Shopping App QA Team

“We need to verify overall stability (including installation) in 30 minutes before our release window. No time for elaborate test planning.”

Startup Development Team

“After that major refactoring, we just want to make sure nothing’s crashing or force-closing unexpectedly.”

The Trade-offs

What you get:

- A sanity check with zero prep time

- Catches edge cases you’d never think to test

- Finds those weird crashes that happen in unexpected user journeys

What you don’t get:

- Deep business logic validation or testing of specific user scenarios

Scenario Testing: “Feature Validation”

Perfect For These Scenarios

- Mission-critical user flows that absolutely cannot break

- Complex conditional testing with specific data sets or user states

- Ongoing monitoring of key features post-release

How It Actually Works

You define exactly what should happen, step by step. Build your scenarios in Stego using drag and drop, then let Ptero execute them exactly as specified.

| Build scenarios in Stego → Upload to Ptero → Run tests → Review comprehensive results |

Real Team Examples

Enterprise QA Team

“Payment processing across different platforms and payment methods—we need to test every possible combination systematically.”

User Journey Assurance Team

“Browse services → place order/booking → complete payment. This flow is our revenue lifeline and cannot fail.”

The Trade-offs

What you get:

- Laser focus on business-critical functionality

- Repeatable testing for consistent quality assurance

- Deep validation of complex user journeys

What you don’t get:

- Coverage of paths you haven’t explicitly defined

- Discovery of unexpected interaction patterns

Decision Framework: Which Automation Method?

| Your Situation | Go With | Why This Makes Sense |
|---|---|---|
| Release deadline approaching fast | Autonomous Exploratory | Get maximum coverage with minimal setup |
| Core business flows must work perfectly | Scenario Testing | Precise validation of what drives revenue |
| Just pushed major code changes | Autonomous Exploratory | Catch unexpected side effects quickly |
| Need regular quality monitoring | Scenario Testing | Consistent standards over time |
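If it helps to make the decision table concrete, it can be read as a simple lookup. The sketch below is only an illustration in Python: the situation keys, the `Method` enum, and the `recommend` function are hypothetical names invented for this example and are not part of Apptest.ai. It returns a set so that a team facing several situations at once sees every method the table suggests.

```python
from enum import Enum


class Method(Enum):
    AUTONOMOUS_EXPLORATORY = "Autonomous Exploratory"
    SCENARIO_TESTING = "Scenario Testing"


# Hypothetical situation keys; each maps to the "Go With" column of the table.
DECISION_TABLE = {
    "release_deadline_close": Method.AUTONOMOUS_EXPLORATORY,
    "core_flows_must_work": Method.SCENARIO_TESTING,
    "major_code_changes": Method.AUTONOMOUS_EXPLORATORY,
    "regular_quality_monitoring": Method.SCENARIO_TESTING,
}


def recommend(situations):
    """Return the set of methods the table suggests for the given situations."""
    unknown = [s for s in situations if s not in DECISION_TABLE]
    if unknown:
        raise ValueError(f"Unknown situation(s): {unknown}")
    return {DECISION_TABLE[s] for s in situations}


if __name__ == "__main__":
    # A team that just refactored and also has a revenue-critical flow.
    for method in sorted(recommend(["major_code_changes", "core_flows_must_work"]),
                         key=lambda m: m.value):
        print(method.value)
```

When the situations span both columns, the result contains both methods, which matches the combined approach many teams end up with.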

The Power Combo

Most successful teams don’t choose one or the other—they use both strategically:

- Autonomous exploratory for broad stability sweeps

- Scenario testing for deep validation of critical paths

This approach gives you both comprehensive coverage and focused precision where it matters most.

Pre-Flight Checklists

Before Running Autonomous Exploratory Tests

- ▢ Verify app installs and launches properly

- ▢ Set realistic time boundaries (typically 10-30 minutes)

- ▢ Confirm external services and APIs are operational

- ▢ Clear any test data that might interfere

Before Running Scenario Tests

- ▢ Map out your critical user journeys

- ▢ Prepare all necessary test data (accounts, sample products, etc.)

- ▢ Define clear success criteria for each step

- ▢ Complete scenario creation in Stego

- ▢ Validate scenarios work in your staging environment
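Teams that like to track checklists like these in a script could model one as a small data structure. This is a minimal sketch in Python under stated assumptions: the `PreflightChecklist` class and its methods are hypothetical helpers written for this example, not an Apptest.ai, Stego, or Ptero feature. The item texts are taken from the scenario checklist above.

```python
from dataclasses import dataclass, field


@dataclass
class PreflightChecklist:
    """Hypothetical helper: tracks which pre-flight items are done."""
    name: str
    items: dict = field(default_factory=dict)  # item text -> completed?

    def pending(self):
        """Return the items that are not yet completed, in checklist order."""
        return [item for item, done in self.items.items() if not done]

    def ready(self):
        """True only when every item has been completed."""
        return not self.pending()


scenario_checklist = PreflightChecklist(
    name="Scenario tests",
    items={
        "Map out critical user journeys": True,
        "Prepare test data (accounts, sample products, etc.)": True,
        "Define success criteria for each step": True,
        "Complete scenario creation in Stego": True,
        "Validate scenarios in staging": False,  # still outstanding
    },
)

if __name__ == "__main__":
    if scenario_checklist.ready():
        print(f"{scenario_checklist.name}: ready to run")
    else:
        print(f"{scenario_checklist.name}: not ready")
        for item in scenario_checklist.pending():
            print(f"  pending: {item}")
```

The same structure would work for the autonomous exploratory checklist by swapping in its four items.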

The Bottom Line

App testing isn’t just about finding bugs—it’s about protecting user experience and business reliability. The question isn’t whether to test, but how to test most effectively with your available resources.

Apptest.ai’s dual approach gives you the flexibility to match your testing strategy to your actual business needs. Quick stability check before a hotfix? Autonomous exploratory has you covered. Critical payment flow validation? Scenario testing ensures precision.

What’s your next release looking like, and which approach fits your timeline?

Need help designing a testing strategy that fits your team’s workflow?

We’re here to help you figure out the right mix of testing approaches for your specific situation.